Deploying a Kubernetes 1.18.0 Cluster with kubeadm: Practice Notes

Kubeadm

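Kubeadm is a tool that provides a best-practice "fast path" for creating Kubernetes clusters. It performs the necessary steps in a user-friendly way to get a minimum viable, secure cluster up and running. Only kubeadm, kubelet, and kubectl need to be installed on the servers; the other core components are deployed as containers.

kubeadm repository: https://github.com/kubernetes/kubeadm

Reference documentation: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/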

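- Common commands

kubeadm init: bootstraps a Kubernetes master node

kubeadm join: bootstraps a Kubernetes worker node and joins it to the cluster

kubeadm upgrade: upgrades a Kubernetes cluster to a newer version

kubeadm config: if the cluster was initialized with kubeadm v1.7.x or lower, configures it so that kubeadm upgrade can be used

kubeadm token: manages the tokens used by kubeadm join

kubeadm reset: reverts the changes that kubeadm init or kubeadm join made to a node

kubeadm version: prints the kubeadm version

kubeadm alpha: previews a set of new features made available to gather feedback from the community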

- Maturity

| Area | Maturity Level |
|---|---|
| Command line UX | GA |
| Implementation | GA |
| Config file API | beta |
| CoreDNS | GA |
| kubeadm alpha subcommands | alpha |
| High availability | alpha |
| DynamicKubeletConfig | alpha |
| Self-hosting | alpha |

- Required ports on master nodes

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 6443* | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

- Required ports on worker nodes

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services† | All |

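Prerequisites

System preparation

- Open ports:

Open the ports required by each Kubernetes component, using the port ranges listed above.

# List the open ports (none are open by default)
firewall-cmd --list-ports

# Open port 10250/tcp
# --zone=public: the zone; --add-port=10250/tcp: port and protocol; --permanent: persist the rule
firewall-cmd --zone=public --add-port=10250/tcp --permanent

# Reload the firewall so the permanent rules take effect
firewall-cmd --reload

# Alternatively, stop the firewall
systemctl stop firewalld.service

# Prevent the firewall from starting at boot
systemctl disable firewalld.service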
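Opening the ports one by one is repetitive, so the step above can be sketched as a small helper. This is only an illustration: the function name `firewall_cmds` is hypothetical, the port lists are copied from the tables above, and the function prints the commands instead of running them.

```shell
#!/bin/sh
# Print (do not run) the firewall-cmd calls for a node role.
# The port lists are copied from the port tables above.
firewall_cmds() {
    role="$1"
    case "$role" in
        master) ports="6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp" ;;
        node)   ports="10250/tcp 30000-32767/tcp" ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    for p in $ports; do
        echo "firewall-cmd --zone=public --add-port=$p --permanent"
    done
    # Permanent rules only become active after a reload.
    echo "firewall-cmd --reload"
}

firewall_cmds master
```

After reviewing the printed commands, they could be applied as root with `firewall_cmds master | sh`.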

- Disable SELinux:

setenforce 0
vi /etc/selinux/config
# Set SELINUX=disabled

- Bridge settings

touch /etc/sysctl.d/k8s.conf

# Add the following content
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1

# Load the module and apply the settings
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

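- Turn off swap

# Turn off the swap partition
swapoff -a

# In /etc/fstab, comment out the swap line, for example:
/mnt/swap swap swap defaults 0 0

# Adjust the swappiness parameter (editing this file makes the change permanent)
vim /etc/sysctl.conf
# Set vm.swappiness to 0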
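The manual fstab edit above could also be scripted. The following is a minimal sketch, assuming the swap entries follow the usual fstab layout with `swap` as the third field; the function name `disable_swap_entries` is hypothetical, and a `.bak` backup of the file is kept.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file (path passed as $1).
# A backup copy is written next to it with the suffix .bak.
disable_swap_entries() {
    fstab="$1"
    # Match lines that are not already comments and whose third field is "swap".
    sed -i.bak -E \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' \
        "$fstab"
}
```

On a real node this would be called as `disable_swap_entries /etc/fstab` after `swapoff -a`; it is worth trying it on a copy of the file first.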

Preparing IPVS for kube-proxy

Running kube-proxy in IPVS mode requires the following kernel modules to be loaded:

ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack_ipv4

touch /etc/sysconfig/modules/ipvs.modules

# Add the following script so that the modules are loaded automatically after a reboot
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4

# Make the script executable, run it, and check that the modules are loaded
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Install the ipset package and the ipvsadm management tool

yum -y install ipset
yum -y install ipvsadm
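To confirm that every module from the list above is present, the `lsmod` output can be filtered. A minimal sketch follows; the function name `missing_ipvs_modules` is hypothetical and the sample input is made up for illustration.

```shell
#!/bin/sh
# Read `lsmod` output on stdin and print each required module that is absent.
missing_ipvs_modules() {
    # Column 1 of lsmod is the module name; the first line is a header.
    loaded="$(awk 'NR > 1 { print $1 }')"
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        echo "$loaded" | grep -qx "$m" || echo "$m"
    done
}

# Illustrative sample input (not taken from a real host):
missing_ipvs_modules <<'EOF'
Module                  Size  Used by
ip_vs_rr               12600  0
ip_vs                 145497  2 ip_vs_rr
EOF
```

On a node, the check would be `lsmod | missing_ipvs_modules`; empty output means all five modules are loaded.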

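Installing and configuring Docker

Since version 1.6, Kubernetes has used CRI (Container Runtime Interface). The default container runtime is still Docker, through the dockershim CRI implementation built into the kubelet.

- Install Docker

# Update the yum packages
sudo yum update

# Install the required packages
sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the yum repository
sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# List every docker-ce version in the repositories so that a specific one can be chosen
yum list docker-ce --showduplicates | sort -r

# Install the latest Docker
sudo yum install docker-ce

# Or install a specific version, e.g. the listed 3:19.03.5-3.el7
sudo yum install docker-ce-19.03.5

# Start Docker and enable it at boot
sudo systemctl start docker
sudo systemctl enable docker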

# Registry mirrors
vim /etc/docker/daemon.json

## Add the mirror addresses
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://hub-mirror.c.163.com",
    "https://registry.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn"
  ]
}

## Restart the service
sudo systemctl daemon-reload
sudo systemctl restart docker

- Change the Docker cgroup driver to systemd

On Linux distributions that use systemd as the init system, using systemd as Docker's cgroup driver keeps nodes more stable when resources are tight.

# Create or edit /etc/docker/daemon.json (not needed again if exec-opts was already set in the step above)
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}

# Restart Docker
sudo systemctl daemon-reload
sudo systemctl restart docker

Installing kubeadm, kubectl, and kubelet

- Installation on CentOS

# Add kubernetes.repo
vim /etc/yum.repos.d/kubernetes.repo

# Write the following content and save
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# Build the yum cache
yum makecache fast

# List the available versions
yum list kubelet kubeadm kubectl --showduplicates|sort -r

# Install the latest version
yum install -y kubelet kubeadm kubectl

# Or install a specific version
yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

kubeadm: initializes the Kubernetes cluster

kubectl: the Kubernetes command-line tool, used to deploy and manage applications, inspect resources, and create, delete, and update components

kubelet: responsible for starting Pods and containers

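Deploying the Kubernetes cluster with kubeadm

kubeadm init: configuring the Kubernetes master node

- Enable the kubelet service at boot

systemctl enable kubelet.service

- Export the configuration file and edit it

# Export the default configuration
mkdir -p /data/kubeadm/config /data/kubeadm/log
kubeadm config print init-defaults > /data/kubeadm/config/kubeadm.yml

# Edit the configuration file
vim /data/kubeadm/config/kubeadm.yml

# Change the content as follows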

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # Set to the master node IP
  advertiseAddress: 192.168.1.102
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: localhost.localdomain
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager:
  extraArgs:
    horizontal-pod-autoscaler-use-rest-clients: "true"
    horizontal-pod-autoscaler-sync-period: "10s"
    node-monitor-grace-period: "10s"
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# Use the Aliyun registry
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Set the Kubernetes version
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  # Pod network CIDR used with Calico
  podSubnet: "172.16.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Enable IPVS mode
# Note: with this apiVersion kubeadm ignores the document (see the [config] WARNING
# in the init log below); KubeProxyConfiguration belongs to the
# kubeproxy.config.k8s.io/v1alpha1 API group. In these notes IPVS is therefore
# switched on later by editing the kube-proxy ConfigMap.
apiVersion: kubeadm.k8s.io/v1beta2
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

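- List and pull the images

# List the images
kubeadm config images list --config /data/kubeadm/config/kubeadm.yml

# Pull the images
kubeadm config images pull --config /data/kubeadm/config/kubeadm.yml

- Initialize the Kubernetes master node

kubeadm init --config=/data/kubeadm/config/kubeadm.yml --upload-certs | tee /data/kubeadm/log/kubeadm-init.log

--upload-certs: uploads the certificates so that they can be distributed automatically when further nodes join later

| tee /data/kubeadm/log/kubeadm-init.log: also writes the output to a log file

- What kubeadm init does

Running init produces a log like the following: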

W0601 11:33:16.858211 1719 strict.go:47] unknown configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta2", Kind:"KubeProxyConfiguration"} for scheme definitions in "k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/scheme/scheme.go:31" and "k8s.io/kubernetes/cmd/kubeadm/app/componentconfigs/scheme.go:28"

W0601 11:33:16.858535 1719 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[config] WARNING: Ignored YAML document with GroupVersionKind kubeadm.k8s.io/v1beta2, Kind=KubeProxyConfiguration

[init] Using Kubernetes version: v1.18.0

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [localhost.localdomain kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.102]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost.localdomain localhost] and IPs [192.168.1.102 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost.localdomain localhost] and IPs [192.168.1.102 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

W0601 11:33:33.405533 1719 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0601 11:33:33.411476 1719 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 31.511863 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ca23402e2e70c5613b2ee10507b6065a548bb715f992c335e6498f25d30c0f96

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.102:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:2d14d0998d3d2921771e6c6a81477b5124d87f920b7c4caeec8ebefe3c94fe5b

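Key steps in the process:

[kubelet-start] writes the kubelet configuration file "/var/lib/kubelet/config.yaml"

[certs] generates the various certificates

[kubeconfig] generates the kubeconfig files in /etc/kubernetes; the components use them to communicate with each other

[control-plane] installs the master components from the YAML files in /etc/kubernetes/manifests

[etcd] installs the etcd service from /etc/kubernetes/manifests/etcd.yaml

[kubelet] configures the kubelet through a ConfigMap

[patchnode] records CNI information on the Node as annotations

[mark-control-plane] labels the current node with the master role and adds the NoSchedule taint, so that by default no Pods are scheduled onto the master node

[bootstrap-token] generates the token; record it, because kubeadm join needs it later when nodes are added to the cluster

[addons] installs the CoreDNS and kube-proxy addons

- Configure kubectl

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# Needed when running as a non-root user
chown $(id -u):$(id -g) $HOME/.kube/config

Verify

kubectl get node

# Output
NAME STATUS ROLES AGE VERSION
kubernetes-master NotReady master 92m v1.18.0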

kubeadm join: configuring the Kubernetes slave (worker) nodes

To add a slave node to the cluster, install the three tools kubeadm, kubectl, and kubelet on the slave server and then run the kubeadm join command.

- Join the slave node

kubeadm join 192.168.1.120:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4133848ddc81242c50c95b684be0fa049e63362b1af542a49d9c31a65c2b138b

# Output

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Verify

kubectl get nodes

# Output

NAME STATUS ROLES AGE VERSION

kubernetes-slave1 NotReady <none> 5m14s v1.18.0

kubernetes-master NotReady master 92m v1.18.0
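Both nodes report NotReady at this point because no network plugin has been installed yet. To re-check the status after each later step, the listing can be filtered; the following is a small sketch in which the function name `not_ready_nodes` is hypothetical and the sample input is copied from the output above.

```shell
#!/bin/sh
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is not exactly "Ready".
# (A status such as "Ready,SchedulingDisabled" is reported as well.)
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input copied from the output above:
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
kubernetes-slave1 NotReady <none> 5m14s v1.18.0
kubernetes-master NotReady master 92m v1.18.0
EOF
```

Against a live cluster this would be used as `kubectl get nodes | not_ready_nodes`.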

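Configuring the network plugin

- About container networking

Container networking is the mechanism through which a container connects to other containers, the host, and external networks. Container runtimes offer several network modes. Taking Docker as an example, the following networks can be configured for a container by default:

none: the container gets its own dedicated network stack with no external connectivity.

host: the container shares the host's network stack, with no isolation.

default bridge: the default network mode. Containers can reach each other by IP address.

Custom bridge: a user-defined bridge with more flexibility, better isolation, and other convenience features.

- CNI

CNI (Container Network Interface) is a CNCF project consisting of a specification and libraries for configuring network interfaces in Linux containers, plus a number of plugins. CNI is concerned only with allocating network resources when a container is created and releasing them when the container is deleted.

CNI was designed as a framework for dynamically configuring the appropriate network settings and resources when containers are provisioned or destroyed. Plugins configure and manage IP addresses for interfaces and usually provide functionality for IP management, per-container IP assignment, and multi-host connectivity. The container runtime calls the network plugin to allocate an IP address and configure networking when a container starts, and calls it again to clean up those resources when the container is removed.

The runtime or orchestrator decides which network a container should join and which plugin to call. The plugin then adds an interface to the container's network namespace as one side of a veth pair. Next, it makes changes on the host, including attaching the other end of the veth pair to a bridge. Finally, it assigns an IP address and sets up routes by calling a separate IPAM (IP Address Management) plugin.

In Kubernetes, the kubelet calls the plugins it finds at the appropriate times to configure networking automatically for the pods it starts.

The CNI plugins available for Kubernetes include:

- Flannel

- Calico

- Canal

- Weave

- Install Calico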

kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

- Verify

watch kubectl get pods --all-namespaces

Enabling IPVS in kube-proxy

- Edit the configuration

In the kube-system/kube-proxy ConfigMap, set mode: "ipvs" in config.conf

kubectl edit cm kube-proxy -n kube-system

- Restart the kube-proxy pod on every node

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

- Verify

kubectl logs kube-proxy- -n kube-system

Deploying Nginx with kubectl

- Check the component status

kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

- Check the status of the master and slave nodes

kubectl cluster-info

kubectl get nodes

- YAML configuration file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

A YAML file like this corresponds to an API object in Kubernetes. Once the file is submitted, Kubernetes takes care of creating the containers or other API resources that the object defines.

kind field: specifies the type of this API object, here Deployment.

Pod template (spec.template): defines the Pod. The Pod contains one container whose image (spec.template.spec.containers[].image) is nginx:1.7.9 and which listens on port 80 (containerPort).

metadata field: holds identifying information. Labels are mainly used by Kubernetes to select and filter objects.

The definition of a Kubernetes API object can be divided into two parts, metadata and spec. The former holds the object's metadata; the latter holds the definition specific to this object and describes the function it is meant to provide.

Using one kind of API object (Deployment) to manage another (Pod) is known in Kubernetes as the controller pattern. The Deployment acts as the controller of the Pods.

- Related commands

# Create
kubectl create -f nginx-deployment.yaml

# Update
kubectl replace -f nginx-deployment.yaml
kubectl apply -f nginx-deployment.yaml
kubectl edit -f nginx-deployment.yaml

# Delete
kubectl delete -f nginx-deployment.yaml

# Open a shell in the pod
kubectl exec -it nginx-deployment-cc7df4f8f-nlcn8 -- /bin/bash

# Show the status of the matching pods
kubectl get pods -l app=nginx

# Show the details of a Pod
kubectl describe pod nginx-deployment-cc7df4f8f-nlcn8

- Expose the service

# Expose port 80 of the Nginx deployment
kubectl expose deployment nginx-deployment --port=80 --type=LoadBalancer

# List the published services
kubectl get services
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7h55m
nginx-deployment LoadBalancer 10.103.3.26 <pending> 80:30626/TCP 18s

# The nginx page can now be reached at http://<node-ip>:30626/
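The EXTERNAL-IP stays at `<pending>` because no external load balancer is available in this setup, so the service is reached through the node port that appears after the colon in PORT(S). A minimal sketch for extracting that port from the listing follows; the function name `node_port` is hypothetical and the sample input is copied from the output above.

```shell
#!/bin/sh
# Read `kubectl get services` output on stdin and print the node port
# of the service named in $1 (PORT(S) looks like "80:30626/TCP").
node_port() {
    awk -v svc="$1" '$1 == svc { split($5, a, "[:/]"); print a[2] }'
}

# Sample input copied from the output above:
node_port nginx-deployment <<'EOF'
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7h55m
nginx-deployment LoadBalancer 10.103.3.26 <pending> 80:30626/TCP 18s
EOF
```

With a live cluster, `kubectl get services | node_port nginx-deployment` would give the port to use in the URL.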

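Deploying common Kubernetes components

Helm

- About Helm and charts

Helm is a package manager for Kubernetes applications. It manages charts, which are preconfigured installation packages, much like APT on Ubuntu or YUM on CentOS.

A Helm chart is a set of YAML files that packages a native Kubernetes application. It allows some of the application's metadata to be customized at deployment time and makes the application easier to distribute.

- Chart concepts

A Chart is a Helm package. It contains all the resource definitions needed to run an application, tool, or service inside a Kubernetes cluster. It can be thought of as the Kubernetes equivalent of a Homebrew formula, an Apt dpkg, or a Yum RPM file.

A Repository is the place where charts are collected and shared, similar to a package index.

A Release is an instance of a chart running in a Kubernetes cluster. A chart can usually be installed into the same cluster many times, and each installation creates a new release.

- Helm concepts

Helm installs charts into Kubernetes, creating a new release for each installation. To find new charts, search the Helm chart repositories.

- What Helm and charts provide

- Application packaging

- Version management

- Dependency checking

- Easier application distribution

- Installing Helm

Helm (v2) consists of the helm command-line client and the server-side component Tiller. Download the helm client and install it to /usr/local/bin on the master node (node1).

wget http://storage.googleapis.com/kubernetes-helm/helm-v2.15.1-linux-amd64.tar.gz
tar -zxvf helm-v2.15.1-linux-amd64.tar.gz
cd linux-amd64/
cp helm /usr/local/bin/

Tiller, the server-side part of Helm, usually runs inside the Kubernetes cluster. Because the Kubernetes API server has RBAC enabled, a service account named tiller must be created for Tiller and bound to a suitable role; see Role-based Access Control in the Helm documentation. For simplicity, the built-in ClusterRole cluster-admin is bound to it directly. The rbac-config.yaml file looks like this: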

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system

# Create the objects
kubectl create -f rbac-config.yaml

# Output
serviceaccount/tiller created
clusterrolebinding.rbac.authorization.k8s.io/tiller created

Deploy Tiller with helm

# Point the tiller image at one that can be pulled (docker search tiller lists candidates) and use a domestic mirror as the charts repo
helm init --upgrade -i sapcc/tiller:v2.15.1 --service-account=tiller --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Check that it is running

# Tiller is deployed in the kube-system namespace with the label app=helm
kubectl get pods -n kube-system -l app=helm
NAME READY STATUS RESTARTS AGE
tiller-deploy-6bdbf9884d-pstx4 1/1 Running 0 5m43s

- Common Helm commands

# Show the version
helm version

# List the installed releases
helm list

# Search for charts
helm search nginx

# Download a remote chart package
helm fetch rancher-stable/rancher

# Show the details of a chart
helm inspect rancher-stable/rancher

# Install a chart
helm install --name nginx --namespace prod bitnami/nginx

# Show the status of a release
helm status nginx

# Delete a release
helm delete --purge nginx

# Add a repo
helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
helm repo add --username admin --password password myps https://harbor.pt1.cn/chartrepo/charts

# Update the repo index
helm repo update

# Create a chart
helm create helm_charts

# Lint the chart
helm lint

# Package the chart
cd helm_charts && helm package ./

# Render the generated YAML
helm template helm_charts-0.1.1.tgz

# Update the image (release name "test" assumed here)
helm upgrade --set image.tag='v201908' test myharbor/study-api-en-oral

# Roll a release back to a given revision (Helm v2 expects the revision number)
helm rollback nginx 1

Deploying Ingress-nginx with Helm

- Ingress-nginx makes it easy to expose services in the cluster so that they can be reached from outside. Deploy it with helm as follows

# Search for the chart
helm search stable/nginx-ingress

# Download the remote chart package
helm fetch stable/nginx-ingress
tar -xvf nginx-ingress-1.40.2.tgz

- Edit values.yaml as follows

# 1. Change the image repository
name: controller
image:
  repository: siriuszg/nginx-ingress-controller
  tag: "0.33.0"
  pullPolicy: IfNotPresent
  runAsUser: 101
  allowPrivilegeEscalation: true
# ......
# Required for use with CNI based kubernetes installations (such as ones set up by kubeadm),
# since CNI and hostport don't mix yet. Can be deprecated once https://github.com/kubernetes/kubernetes/issues/23920
# is merged
# 2. Run the nginx ingress controller on the host network by setting hostNetwork to true
hostNetwork: true
dnsConfig: {}
dnsPolicy: ClusterFirst
reportNodeInternalIp: false
## Use host ports 80 and 443
# 3. Enable the host ports
daemonset:
  useHostPort: true
  hostPorts:
    http: 80
    https: 443
# ......
# 4. With hostNetwork no Service is needed, so set enabled to false
service:
  enabled: false
  annotations: {}
  labels: {}
# ......

- Install the chart

helm install --name nginx-ingress -f nginx-values.yaml . --namespace kube-system

- Verify

# Find the IP the nginx-ingress-controller was deployed to; if http://192.168.1.121 returns "default backend", the deployment is complete
kubectl get pod -n kube-system -o wide

Deploying kubernetes-dashboard with Helm

- Create and install the TLS secret

openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout ./tls.key -out ./tls.crt -subj "/CN=192.168.1.121"
kubectl -n kube-system create secret tls dashboard-tls-secret --key ./tls.key --cert ./tls.crt

# Check
kubectl get secret -n kube-system |grep dashboard

- Download kubernetes-dashboard with helm

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/
helm search kubernetes-dashboard/kubernetes-dashboard
helm fetch kubernetes-dashboard/kubernetes-dashboard
tar -xvf kubernetes-dashboard-2.2.0.tgz
cp values.yaml dashboard-chart.yaml

- Customize the chart values

image:
  repository: kubernetesui/dashboard
  tag: v2.0.3
  pullPolicy: IfNotPresent
  pullSecrets: []
replicaCount: 1
ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: 'true'
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  hosts:
  - lhr.dashboard.com
  tls:
  - secretName: dashboard-tls-secret
    hosts:
    - lhr.dashboard.com
  paths:
  - /
metricsScraper:
  enabled: true
  image:
    repository: kubernetesui/metrics-scraper
    tag: v1.0.4
  resources: {}
  containerSecurityContext:
    allowPrivilegeEscalation: false
    readOnlyRootFilesystem: true
    runAsUser: 1001
    runAsGroup: 2001
rbac:
  create: true
  clusterRoleMetrics: true
serviceAccount:
  create: true
  name: dashboard-admin
livenessProbe:
  initialDelaySeconds: 30
  timeoutSeconds: 30
podDisruptionBudget:
  enabled: false
  minAvailable:
  maxUnavailable:
containerSecurityContext:
  allowPrivilegeEscalation: false
  readOnlyRootFilesystem: true
  runAsUser: 1001
  runAsGroup: 2001
networkPolicy:
  enabled: false

- Install the chart

# Install
helm install --name kubernetes-dashboard-2 -f dashboard-chart.yaml . --namespace kube-system

# Apply configuration changes
helm upgrade kubernetes-dashboard-2 -f dashboard-chart.yaml . --namespace kube-system

- Get the token

# Create a script that prints the token
vim dashboard-token.sh

# With the following content
#!/bin/sh
TOKENS=$(kubectl describe serviceaccount dashboard-admin -n kube-system | grep "Tokens:" | awk '{ print $2}')
kubectl describe secret $TOKENS -n kube-system | grep "token:" | awk '{ print $2}'

# Set up an alias
vim ~/.bashrc
alias k8s-dashboard-token="sh /data/chart/dashboard/kubernetes-dashboard/dashboard-token.sh"
source ~/.bashrc

Deploying metrics-server with Helm

metrics-server is the Kubernetes monitoring component and can be used together with kubernetes-dashboard

- Download metrics-server with helm

helm search stable/metrics-server
helm fetch stable/metrics-server
tar -xvf metrics-server-2.11.1.tgz

- Customize the chart values

rbac:
  create: true
  pspEnabled: true
serviceAccount:
  create: true
  name: metircs-admin
apiService:
  create: true
hostNetwork:
  enabled: false
image:
  repository: mirrorgooglecontainers/metrics-server-amd64
  tag: v0.3.6
  pullPolicy: IfNotPresent
replicas: 1
args:
- --logtostderr
- --kubelet-insecure-tls=true
- --kubelet-preferred-address-types=InternalIP
livenessProbe:
  httpGet:
    path: /healthz
    port: https
    scheme: HTTPS
  initialDelaySeconds: 20
readinessProbe:
  httpGet:
    path: /healthz
    port: https
    scheme: HTTPS
  initialDelaySeconds: 20
securityContext:
  allowPrivilegeEscalation: false
  capabilities:
    drop: ["all"]
  readOnlyRootFilesystem: true
  runAsGroup: 10001
  runAsNonRoot: true
  runAsUser: 10001
service:
  annotations: {}
  labels: {}
  port: 443
  type: ClusterIP
podDisruptionBudget:
  enabled: false
  minAvailable:
  maxUnavailable:

- Chart安装

helm install --name metrics-server -f metrics-chart.yaml . --namespace kube-system

helm upgrade metrics-server -f metrics-chart.yaml . --namespace kube-system

- 查看相关指标

# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

kubernetes-master 396m 19% 1189Mi 68%

kubernetes-slave 194m 19% 746Mi 42%

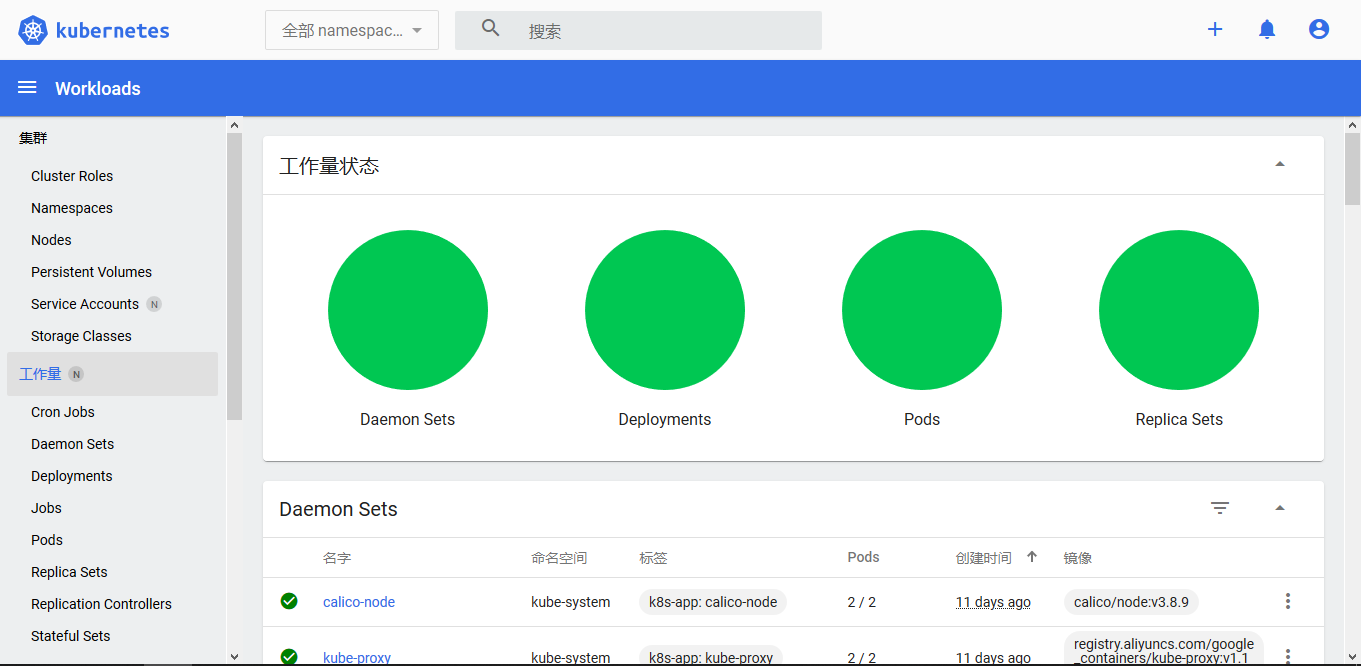

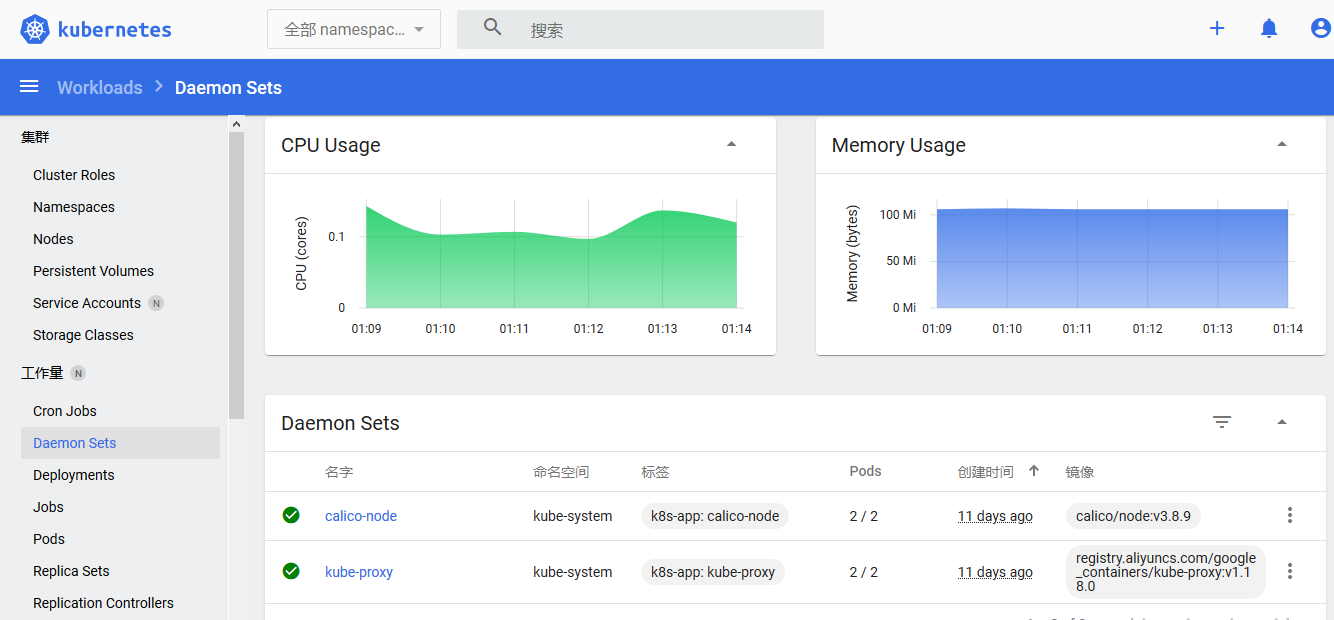

- 效果图

FAQ

- Running kubectl on a Kubernetes slave node fails with: The connection to the server localhost:8080 was refused - did you specify the right host or port?

This happens because kubectl needs the kubernetes-admin credentials. Copy /etc/kubernetes/admin.conf from the master node to the same path on the slave node, then set the environment variable.

# Copy the content into the file
vim /etc/kubernetes/admin.conf

# Set the environment variable
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile

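- helm list fails with Error: Get https://10.96.0.1:443/api/v1/namespaces/kube-system/configmaps?labelSelector=OWNER%!D(MISSING)TILLER: dial tcp 10.96.0.1:443: i/o timeout

The cause is that the IP pool of the network plugin (Calico, for example) conflicts with the IP range of the LAN the hosts are on. Calico is used here, so the plugin's IP pool can be changed with calicoctl.

calicoctl creates, reads, updates, and deletes Calico objects from the command line. See the documentation: https://docs.projectcalico.org/introduction/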
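Whether a pool conflicts with the host network can be checked before changing anything. The sketch below tests two IPv4 CIDR blocks for overlap in plain shell arithmetic; the function names are hypothetical, and the host LAN 192.168.1.0/24 is an assumption inferred from the 192.168.1.x node addresses used in these notes.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
    old_ifs="$IFS"; IFS=.
    set -- $1
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit status 0) when two CIDR blocks share at least one address.
cidr_overlap() {
    a_ip="${1%/*}"; a_len="${1#*/}"
    b_ip="${2%/*}"; b_len="${2#*/}"
    # Two blocks overlap exactly when they agree on the shorter prefix.
    if [ "$a_len" -lt "$b_len" ]; then len="$a_len"; else len="$b_len"; fi
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    a=$(ip_to_int "$a_ip"); b=$(ip_to_int "$b_ip")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Calico default pool vs. the assumed host LAN
if cidr_overlap 192.168.0.0/16 192.168.1.0/24; then
    echo "conflict"
else
    echo "ok"
fi
```

The same check applied to the replacement pool, `cidr_overlap 172.16.0.0/16 192.168.1.0/24`, returns a non-zero status, which is why 172.16.0.0/16 is a workable choice under that assumption.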

1. Install calicoctl on the Kubernetes master node

cd /usr/local/bin
curl -O -L https://github.com/projectcalico/calicoctl/releases/download/v3.8.2/calicoctl
chmod +x calicoctl

2. Install calicoctl as a Kubernetes pod and set an alias

kubectl apply -f https://docs.projectcalico.org/manifests/calicoctl.yaml
alias calicoctl="kubectl exec -i -n kube-system calicoctl -- /calicoctl"

3. Change the IP pool

# List the IP pools
calicoctl get ippool -o wide
NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR
default-ipv4-ippool 192.168.0.0/16 true Always Never false all()

# Add a new IP pool
calicoctl create -f -<<EOF
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: b-ipv4-pool
spec:
  cidr: 172.16.0.0/16
  ipipMode: Always
  natOutgoing: true
EOF

# List the IP pools again
calicoctl get ippool -o wide
NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR
b-ipv4-pool 172.16.0.0/16 true Always Never false all()
default-ipv4-ippool 192.168.0.0/16 true Always Never false all()

# Disable the old IP pool
calicoctl get ippool -o yaml > /data/calico/pools.yaml
vim /data/calico/pools.yaml

apiVersion: projectcalico.org/v3
items:
- apiVersion: projectcalico.org/v3
  kind: IPPool
  metadata:
    creationTimestamp: "2020-07-01T14:32:18Z"
    name: b-ipv4-pool
    resourceVersion: "299739"
    uid: f908d360-3477-4309-a207-f79ac5750b14
  spec:
    blockSize: 26
    cidr: 172.16.0.0/16
    ipipMode: Always
    natOutgoing: true
    nodeSelector: all()
    vxlanMode: Never
- apiVersion: projectcalico.org/v3
  kind: IPPool
  metadata:
    creationTimestamp: "2020-06-26T17:14:39Z"
    name: default-ipv4-ippool
    resourceVersion: "3819"
    uid: 84b1ab4d-7236-4ff4-8e83-597a51856c21
  spec:
    blockSize: 26
    cidr: 192.168.0.0/16
    ipipMode: Always
    natOutgoing: true
    disabled: true # set disabled to true
    nodeSelector: all()
    vxlanMode: Never
kind: IPPoolList
metadata:
  resourceVersion: "299994"

# Apply the change
calicoctl apply -f - < /data/calico/pools.yaml
calicoctl get ippool -o wide
NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR
b-ipv4-pool 172.16.0.0/16 true Always Never false all()
default-ipv4-ippool 192.168.0.0/16 true Always Never true all()

# Restart the tiller pod, or all pods in a namespace
kubectl -n kube-system delete pods tiller-deploy-6bdbf9884d-qz978
kubectl -n [namespace] delete pods --all

# Delete the old IP pool
calicoctl delete pool default-ipv4-ippool

- Working around k8s.gcr.io being blocked

Because the official image registry is blocked, first determine the required images and their versions, then fetch them from a domestic mirror.

# Get the image list
kubeadm config images list

Pull the images from Aliyun and retag them with docker tag

#!/bin/bash
# Replace the names and tags below with the ones printed by kubeadm config images list
images=(
  kube-apiserver:v1.12.1
  kube-controller-manager:v1.12.1
  kube-scheduler:v1.12.1
  kube-proxy:v1.12.1
  pause:3.1
  etcd:3.2.24
  coredns:1.2.2
)
for imageName in "${images[@]}" ; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done

- Chart versions in the repo are too old

$ helm search kubernetes-dashboard

NAME CHART VERSION APP VERSION DESCRIPTION

stable/kubernetes-dashboard 0.6.0 1.8.3 General-purpose web UI for Kubernetes clusters

$ helm repo add stable http://mirror.azure.cn/kubernetes/charts/

"stable" has been added to your repositories

$ helm search kubernetes-dashboard

NAME CHART VERSION APP VERSION DESCRIPTION

stable/kubernetes-dashboard 1.11.1 1.10.1 DEPRECATED! - General-purpose web UI for Kubernetes clusters

- The kubeadm token has expired

# Create a token that never expires
kubeadm token create --ttl 0

# Compute the sha256 hash of the CA certificate's public key
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

# Join the worker node

kubeadm join 192.168.1.120:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:4133848ddc81242c50c95b684be0fa049e63362b1af542a49d9c31a65c2b138b
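The join command is simply the API server endpoint, the token, and the CA hash put together. A small sketch of that assembly follows; the function name `build_join_cmd` is hypothetical, and it only prints the command. kubeadm can also print a ready-made command itself with `kubeadm token create --print-join-command`.

```shell
#!/bin/sh
# Assemble a kubeadm join command from endpoint, token, and CA cert hash.
# The command is printed, not executed.
build_join_cmd() {
    endpoint="$1"; token="$2"; ca_hash="$3"
    # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
    echo "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
        echo "invalid token format: $token" >&2
        return 1
    }
    echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
}

# Values copied from the example above
build_join_cmd 192.168.1.120:6443 abcdef.0123456789abcdef \
    4133848ddc81242c50c95b684be0fa049e63362b1af542a49d9c31a65c2b138b
```

In practice the second and third arguments would come from the `kubeadm token create` and `openssl` commands shown above.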